A safe space where one feels emotionally connected and heard without judgment is usually found with another person. Can a robot make you feel the same? Predicting human behaviour is no longer restricted to mental health professionals; it is increasingly being simulated as an AI (artificial intelligence) function too. After the humongous success of internet-based applications, we have entered the phase of attempting emotional connections with AI! In a world where we still talk about the digital divide, we are finding comfort in willingly pouring our hearts out to an AI-generated bot. According to John McCarthy, artificial intelligence is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence. In the realm of mental healthcare, artificial intelligence reaches people through two interfaces: chatbots and robots.

As we traverse the applications of artificial intelligence for mental health, some of the oft-encountered computerese will be machine learning (ML), deep learning, natural language processing (NLP) and computer vision. For those new to the tech buzz, IBM clarifies these definitions in a foundational way. Applied to mental healthcare, machine learning and deep learning have enabled chatbots to diagnose better and predict patient outcomes, computer vision makes it possible to read non-verbal cues, and NLP can simulate human conversation. Does that mean that AI could be your therapist very soon?

A simplified explanation of artificial intelligence can be found here.

In 1950, Alan Turing asked, ‘Can machines think?’ and today, in 2023, we can answer this question in the affirmative with confidence. The Turing Test was designed for a human interrogator to attempt to distinguish between the text responses of a computer and those of a human, and it is a cinch for AI to get through nowadays! Is this scary, or should we see it as a triumph? What does it have to offer mental health, especially at a time when we need much more emotional support because the mental health burden is on the rise as a consequence of the global pandemic?

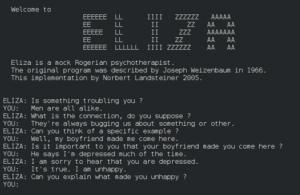

Since the 1960s, scientists and researchers have been attempting to advance and enrich the quality of chatbots, powered by AI and ML, so that they can function as psychotherapists. From ELIZA to ChatGPT, the journey has been towards a more sophisticated chatbot, better in-built knowledge, cost effectiveness, quicker access and persistent efforts to make the bot mimic the human therapist as empathically as possible! AI applications for mental health care are predominantly confined to countries that have digitised systems of working, even though mental health apps are accessible across the globe. In its current form, artificial intelligence is being used to perform several tasks within the mental health treatment realm. NLP makes it possible to transcribe the conversations between therapists and clients in order to maintain quality control over therapists. AI can also keep track of how much of the conversation during therapy was constructive versus chit-chat. Machine learning and deep learning allow doctors to personalise treatments, make more precise diagnoses, choose the right treatment framework and identify variants in diagnostic formulations. AI can also help validate the choice of therapy, for example cognitive behavioural therapy (CBT) conversations, before medication for cases of mild depression and anxiety.

Notably, there are chatbots such as Wysa, Woebot, Cogniant and many others that are adept at simulating cognitive behavioural therapy techniques and procedures to provide mental health support for their users. Chatbots are viewed as improving efficiency, affordability, convenience and patient-driven access, with the implicit assumption that this will improve health equity and social inclusion. With more than 20,000 mental health apps in existence (as of 2021), more people are downloading the apps and taking to their keyboards to talk with chatbots. Thus, with the functions of AI described above, there is a huge prospect of destigmatising mental health care. A global survey of employees revealed that 82% of people would rather talk to a robot than to a manager about their depression and anxiety. This may be promising at a time when the need for mental health support has increased, professionals are sparsely available, waiting times with existing professionals are long, and sharing vulnerabilities with a non-human can feel free of judgment. AI may be emulating a pocket therapist!

However, it is not that straightforward, as several challenges come along. Time and again, psychologists and researchers accentuate the fact that robots cannot replace human therapists, for several reasons: research on the efficacy of robot-delivered therapy is still ongoing; confidentiality and privacy are called into question; social and cultural inclusion remains poor; and bots will not be able to empathise like humans as long as they can only simulate empathy. Though AI has brought a whirlwind of changes to the mental health field, its success is far from conclusively established. A trickier concern is this: if AI can embody consciousness, can an AI-assisted robot then be diagnosed with a mental illness too? This is a question for further philosophical inquiry and scientific research.

Human therapists are not just empathetic; they also have the superpower to co-regulate, pick up nuanced non-verbal signs and use humour adeptly. We have yet to see whether people would respond just as effectively to a robo-therapist. As an eclectic practitioner, I await the day when robots are able to adapt to this approach, fulfilling the diverse needs of clientele in psychotherapeutic practice. Artificial intelligence is changing the world, but it is not a substitute for human intelligence; it is a tool to amplify human creativity and ingenuity. If you are befuddled about what to do with AI, bear one thing in mind: to benefit from AI, as with any other technology, you need to know its merits and demerits and use it judiciously, with the right know-how. What is judicious, and how much, is a matter of individual choice and comfort. We will not know better unless we try it, and only if we want to!